Doh! Forgot to post this earlier in the night, so here it is… technically on Saturday.

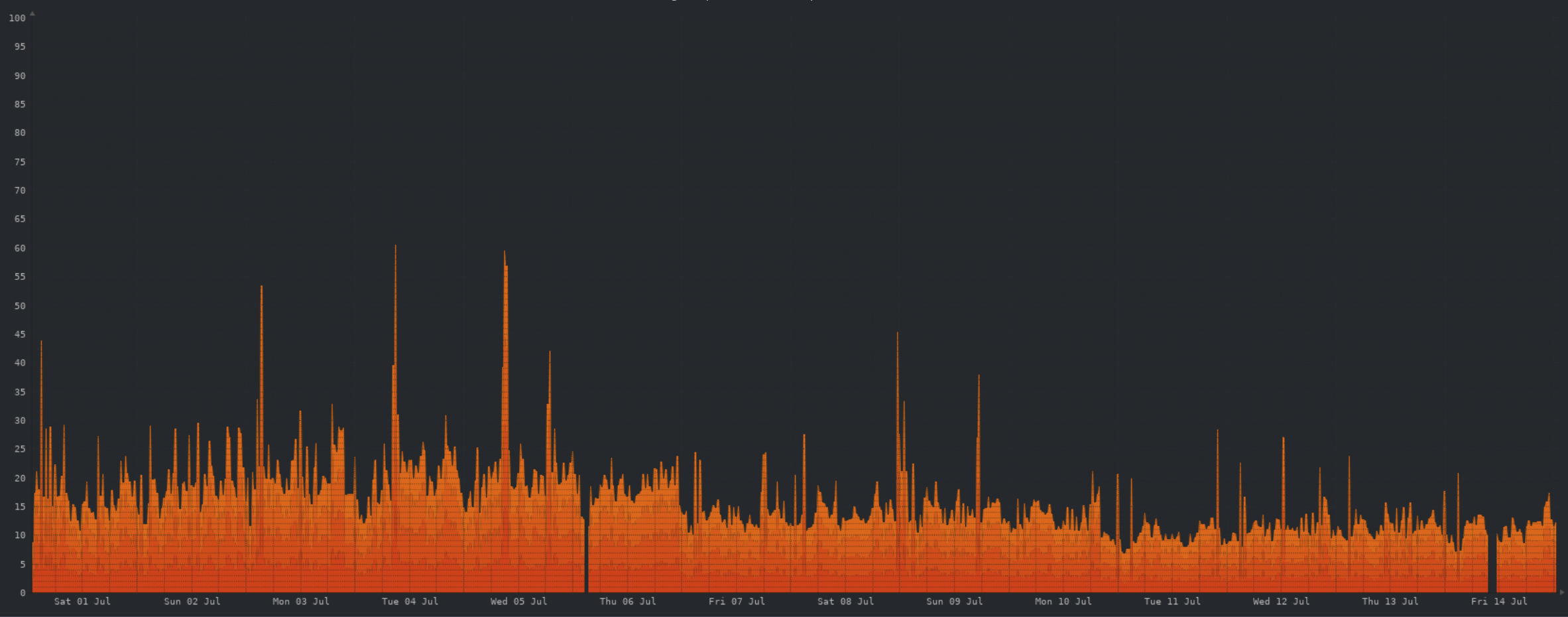

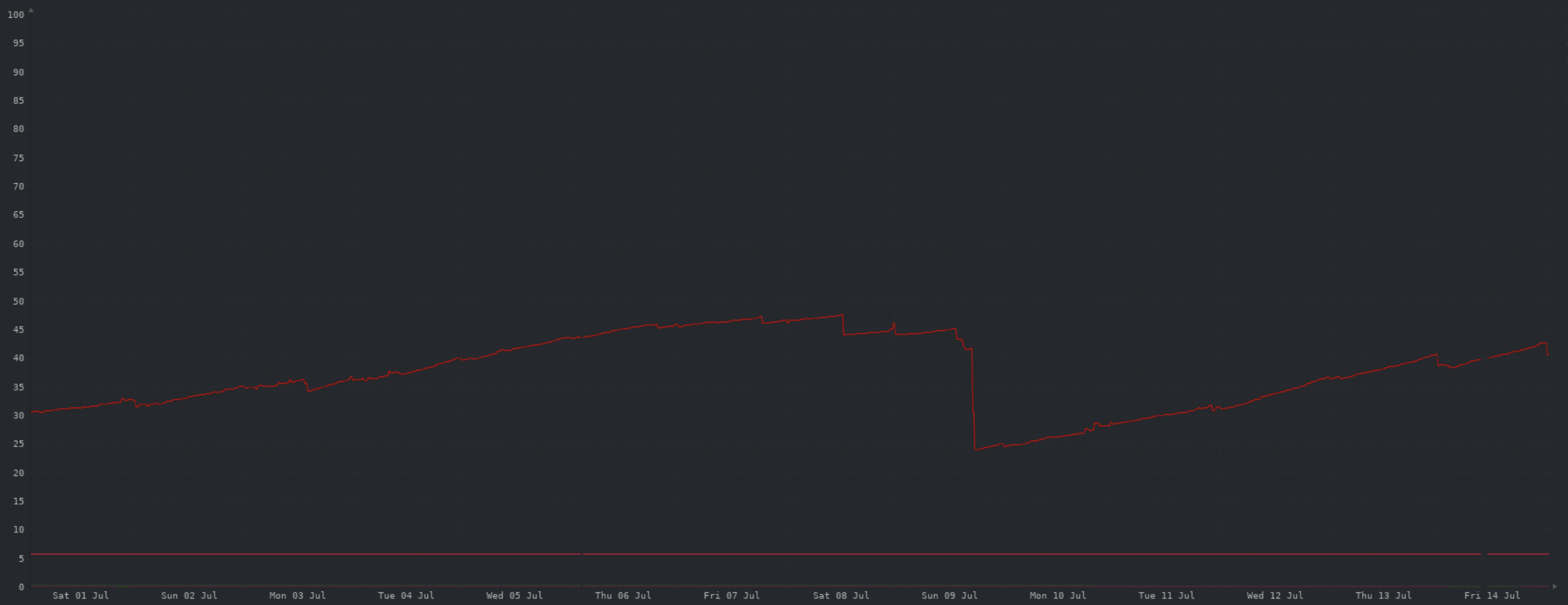

CPU:

The Lemmy devs have made major strides in performance recently, as you can see from the reduced overall CPU load.

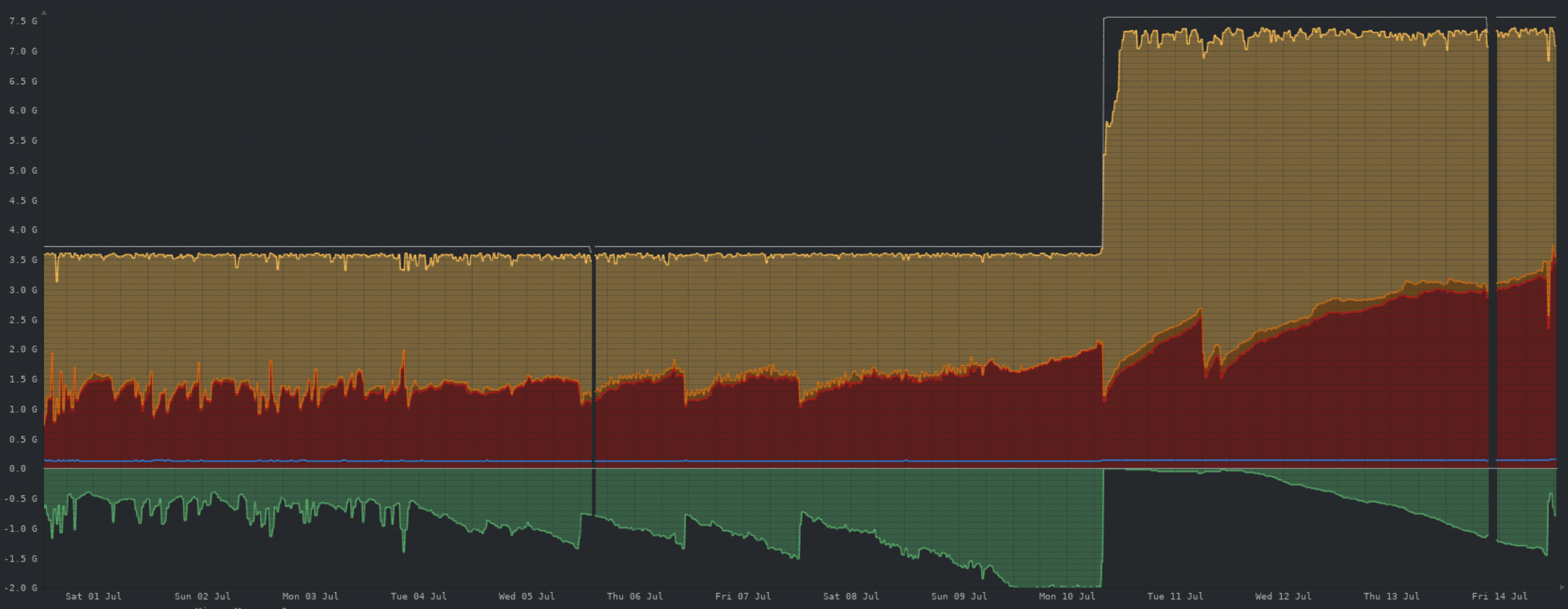

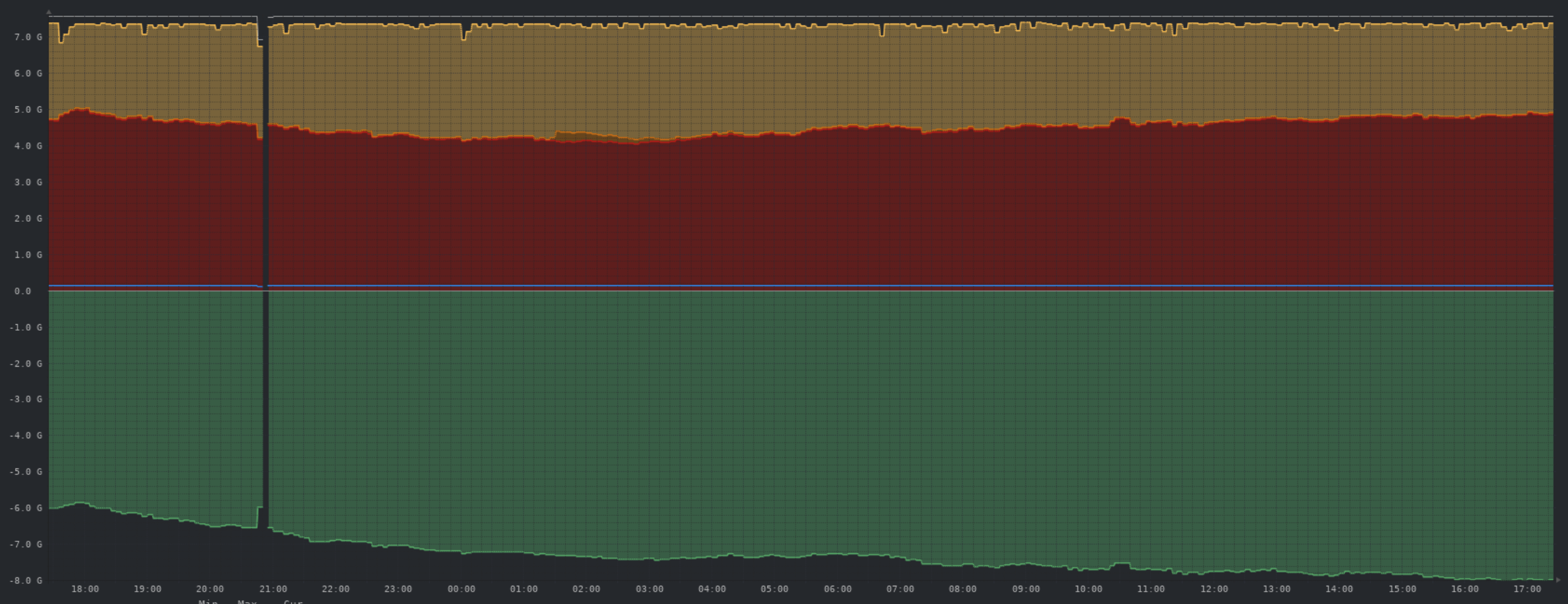

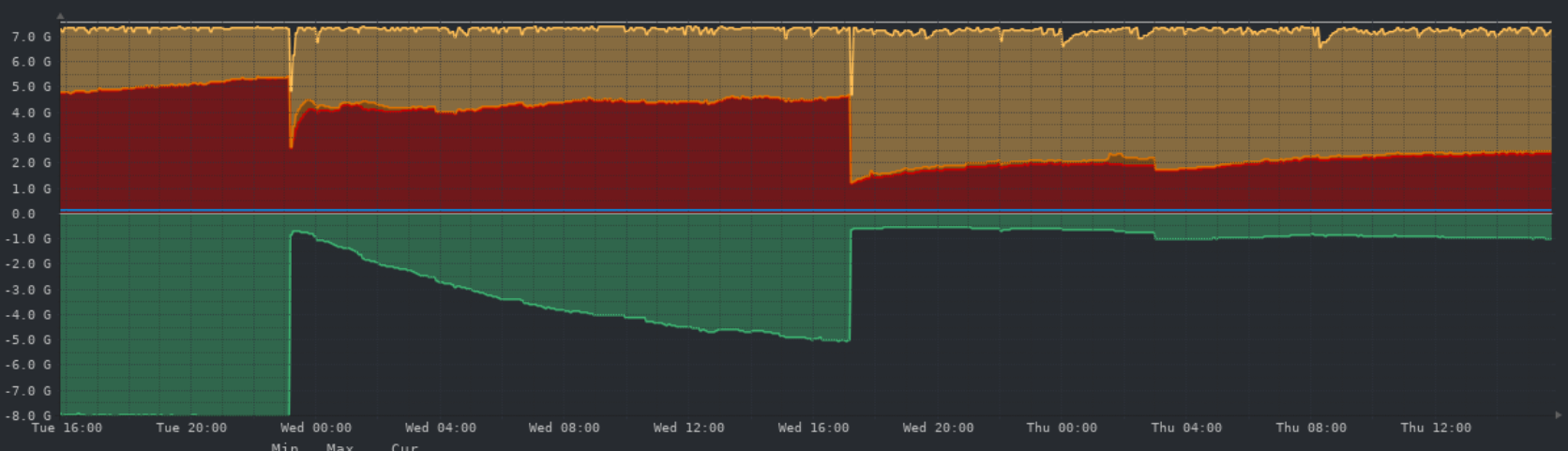

Memory:

I need to figure out why swap is still being used when there is cache/buffer memory available. But as you can see, the upgrade to 8GB of RAM is being put to good use.

Network:

The two large spikes here are from some backups being uploaded to object storage. Apart from that, traffic levels are fine.

Storage:

A HUGE win here this week: it turns out a large portion of the database is data we don’t need and can safely delete pretty much any time. The large drop in storage on the 9th was from me manually deleting all but the most recent ~100k rows in the guilty table. The devs are aware of this issue and are actively working on making DB storage more efficient. Until a better fix lands, I have a cronjob running every hour that deletes all but the most recent 200k rows.
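The hourly cleanup boils down to a "keep only the newest N rows" delete. Below is a minimal sketch of that pattern, assuming the table has a monotonically increasing integer `id` column; the table name `guilty_table` is hypothetical (the post doesn't name it), and the demo uses an in-memory SQLite database only so it runs anywhere. This is not the actual cronjob; the same `DELETE ... WHERE id NOT IN (SELECT id ... ORDER BY id DESC LIMIT n)` statement is also valid in PostgreSQL.

```python
import sqlite3


def prune_keep_latest(conn: sqlite3.Connection, table: str, keep: int) -> int:
    """Delete every row except the `keep` rows with the highest id.

    Returns the number of rows deleted. `table` is interpolated into the SQL
    directly, so it must be a trusted identifier, never user input.
    """
    cur = conn.execute(
        f"DELETE FROM {table} WHERE id NOT IN "
        f"(SELECT id FROM {table} ORDER BY id DESC LIMIT ?)",
        (keep,),
    )
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    # Hypothetical demo table with 1000 rows (ids 1..1000).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE guilty_table (id INTEGER PRIMARY KEY, data TEXT)")
    conn.executemany("INSERT INTO guilty_table (data) VALUES (?)", [("row",)] * 1000)
    deleted = prune_keep_latest(conn, "guilty_table", 200)
    remaining = conn.execute("SELECT COUNT(*) FROM guilty_table").fetchone()[0]
    print(deleted, remaining)  # 800 200
```

Scheduling it hourly would then just be a standard cron entry such as `0 * * * * python3 /path/to/prune.py` (path hypothetical). Keying on `id` rather than a timestamp assumes ids only ever grow.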

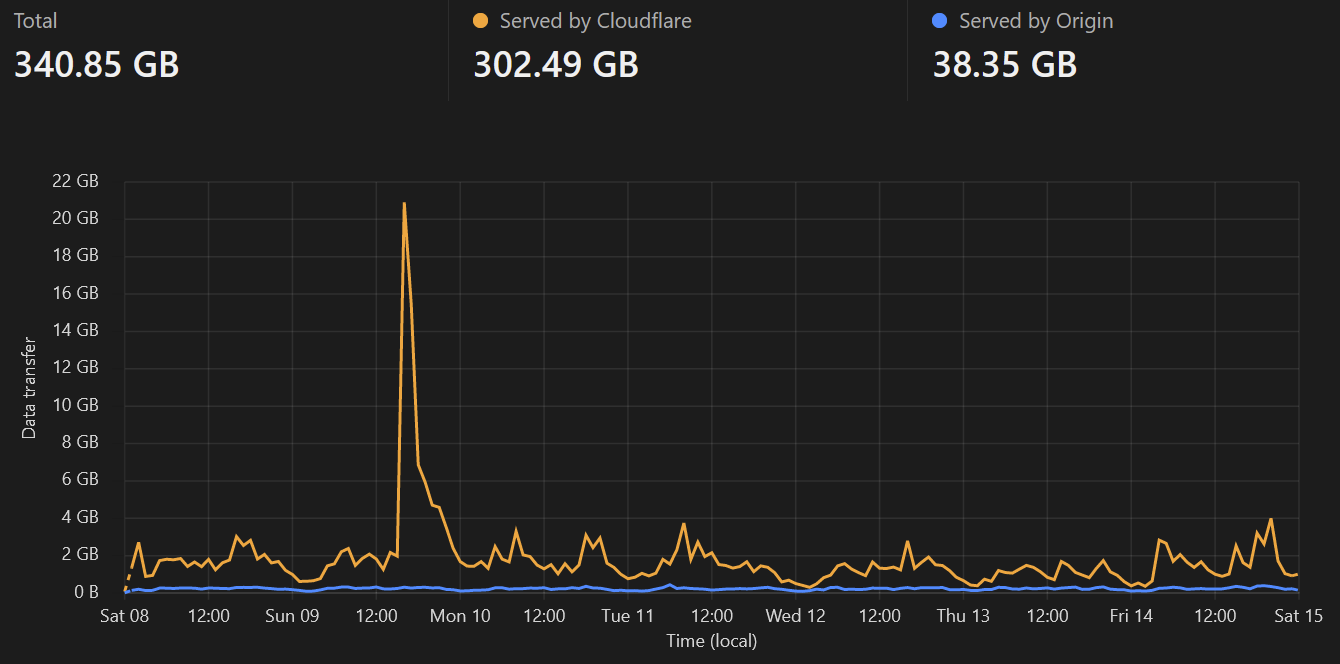

Cloudflare caching:

Cloudflare is still saving us substantial egress traffic from the VPS, though there were no 14MB “icons” being grabbed thousands of times this week 😀

Summary:

All things considered, we’re in a much better place today than a week ago. Storage is much less of a concern, and all other server resources are doing well… though I need to investigate swap usage.

Longer term, it still looks as though storage will be the trigger for further upgrades. However, storage growth will be much slower and more under our control. The recent upward trend is predominantly from locally cached images from object storage, which can be deleted at any time as required.

As usual, feel free to ask questions.

Without memory pressure, only inactive pages and pages without write activity are moved to swap. It would be interesting to see memory stats for Lemmy itself; I expect the rest of the stack to be pretty much free of memory leaks.
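To put numbers on "swap in use while cache is available", here is a minimal sketch that parses Linux `/proc/meminfo`-style text and reports swap used next to page cache, buffers, and `MemAvailable`. The field names are the standard ones from `/proc/meminfo`; the helper names are hypothetical, and this only reads counters, it doesn't explain why pages were swapped.

```python
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}.

    Most values are in kB; a few (e.g. HugePages_Total) are plain counts.
    """
    info: dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            info[key.strip()] = int(parts[0])
    return info


def swap_summary(info: dict[str, int]) -> dict[str, int]:
    """Swap in use versus memory the kernel could still reclaim (all in kB)."""
    return {
        "swap_used_kb": info["SwapTotal"] - info["SwapFree"],
        "cache_and_buffers_kb": info["Cached"] + info["Buffers"],
        "available_kb": info["MemAvailable"],
    }


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():  # only present on Linux
        print(swap_summary(parse_meminfo(meminfo.read_text())))
```

Seeing non-zero `swap_used_kb` alongside a large `cache_and_buffers_kb` is consistent with the explanation above: idle pages were pushed out early, not because RAM ran out.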

I don’t think I’ve ever seen a host exhaust swap before RAM is full…

Looks to be Postgres related. I’ve changed a couple of settings; let’s see if it happens again.

A much more normal memory usage profile now.

What settings did you change? Having a few memory issues myself.

What are you using to transfer to Wasabi? I’ve tried using s3fs for a ~2TB Nextcloud backup and it is sloooowwww. I think I max out at 2MB/sec… and that’s on a Xeon E3v5 with 32GB of RAM and a 1Gbps connection, so I can’t imagine it’s CPU, RAM, or network that’s causing the slowdown.

Also tried sshfs to a local server here in the office, and that was similar speed. I think it’s anything FUSE based.

I haven’t worked out the logistics of handling my backup just yet, since I have 2x2TB drives in RAID1 and a data directory that’s 1.5TB… There’s no room to store a backup locally first and then use something faster like rclone. I could rclone directly, but then nothing is encrypted, and there’s some confidential work-related data on there. As well as my personal portfolio of dick pics.

s3fs, but it’s only used for images as required, not large ongoing transfers. I’ve uploaded multi-GB backups with s3fs and monitoring showed gigabit speeds.

Latency is a major factor. The server is under 1ms from the Wasabi endpoint. Higher latency will hurt transfers that involve lots of files; small numbers of large files should be fine.
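A rough back-of-the-envelope model shows why file count matters more than total size once latency goes up: each file costs a few request round trips before any bytes move, and that cost is paid per file. The sketch below assumes strictly sequential transfers and a hypothetical 3 round trips per file (the real number for s3fs or any FUSE layer isn't stated here and will differ); the file counts and RTTs are illustrative, not measurements.

```python
def estimate_transfer_seconds(
    n_files: int,
    total_bytes: float,
    rtt_s: float,
    bandwidth_bps: float,
    round_trips_per_file: int = 3,  # assumed constant, not a measured s3fs value
) -> float:
    """Sequential model: per-file request latency plus raw throughput time."""
    latency_time = n_files * round_trips_per_file * rtt_s
    throughput_time = total_bytes * 8 / bandwidth_bps
    return latency_time + throughput_time


if __name__ == "__main__":
    ten_gb = 10e9
    gigabit = 1e9
    # 100k small files vs one big file, same 10 GB total, over 1 Gbps.
    print(f"{estimate_transfer_seconds(100_000, ten_gb, 0.001, gigabit):.0f} s")  # 380 s
    print(f"{estimate_transfer_seconds(100_000, ten_gb, 0.030, gigabit):.0f} s")  # 9080 s
    print(f"{estimate_transfer_seconds(1, ten_gb, 0.030, gigabit):.0f} s")  # 80 s
```

At sub-1ms RTT the per-file overhead is modest, but at 30ms it dominates, while a single large file barely notices the latency. Tools that run several transfers concurrently (rclone’s `--transfers` option, for example) roughly divide the latency term by the concurrency.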

Just one question: why can’t we see any NSFW content? Is aussie.zone not federated?

Yes, it’s federated, but NSFW is blocked.

Any chance you’ll unblock NSFW in the future?

No, the legal risks are too high for me personally and the instance as a whole.

I understand, thank you :)

How come, out of interest? There’s already plenty of porn on the internet.

True, I was thinking all instances could talk to each other, so more people could interact with all the content the Fediverse has to offer.