In a robotics lab where I once worked, they used to have a large industrial robot arm with a binocular vision platform mounted on it. It used the two cameras to track an object’s position in three-dimensional space and stay a set distance from the object.

It worked the way our eyes work: it adjusted the pan and tilt of the cameras quickly for small movements, and adjusted the pan and tilt of the platform and the position of the arm to follow larger movements.
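A minimal sketch of that fast/slow split, assuming a simple clamp-and-hand-off rule and a hypothetical 5-degree camera range (the lab system's actual gains and limits aren't described): the fast camera head absorbs as much of a pan or tilt error as it can, and whatever is left over goes to the slower platform/arm.

```python
from dataclasses import dataclass

# Hypothetical limit: how far (in degrees) the fast camera head may deflect
# before the slower platform/arm has to take over the rest of the correction.
CAMERA_RANGE_DEG = 5.0


@dataclass
class Command:
    camera_deg: float    # fast, small correction (the "eye" movement)
    platform_deg: float  # slow, large correction (the "head/body" movement)


def split_correction(error_deg: float, camera_range: float = CAMERA_RANGE_DEG) -> Command:
    """Split one axis of tracking error between the camera head and the platform.

    The camera takes the error clamped to its range; the remainder
    (zero for small movements) is sent to the platform.
    """
    camera = max(-camera_range, min(camera_range, error_deg))
    platform = error_deg - camera
    return Command(camera_deg=camera, platform_deg=platform)


if __name__ == "__main__":
    print(split_correction(2.0))   # small movement: handled by the cameras alone
    print(split_correction(12.0))  # large movement: cameras saturate, platform follows
```

A real tracker would also run the two loops at different speeds; this only shows how the work could be divided.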

Viewers watching the robot would get an eerie and false sense that it was conscious, because the camera movements matched what we see people’s eyes do.

Someone also put a necktie on the robot, which didn’t hurt the illusion.

That professor was Jeff Winger

We’ve been had

Finishing up a rewatch of Community as we speak. Funny to see the gimmick (purportedly) used in real life.

That was my first thought!

He was so streets ahead.

How would we even know if an AI is conscious? We can’t even know that other humans are conscious; we haven’t yet solved the hard problem of consciousness.

Does anybody else feel rather solipsistic or is it just me?

I doubt you feel that way since I’m the only person that really exists.

Jokes aside, when I was in my teens back in the 90s I felt that way about pretty much everyone that wasn’t a good friend of mine. Person on the internet? Not a real person. Person at the store? Not a real person. Boss? Customer? Definitely not people.

I don’t really know why it started, when it stopped, or why it stopped, but it’s weird looking back on it.

Andrew Tate has convinced a ton of teenage boys to think the same, apparently. Kinda ironic.

A Cicero a day and your solipsism goes away.

Rigour is important, and at the end of the day we don’t really know anything. However, this stuff is supposed to be practical: at a certain arbitrary point you need to say “nah, I’m certain enough that this statement is true that I can claim it’s true, thus I know it.”

Descartes has entered the chat

Descartes

Edo ergo caco. Caco ergo sum! [/shitty joke]

Serious now. Descartes was also trying to solve solipsism, but through a different method: he claims at least some sort of knowledge (“I doubt thus I think; I think thus I am”), and then tries to use it as a foundation for more knowledge.

What I’m doing is different. I’m conceding that even radical scepticism, a step further than solipsism, might actually be correct, and that true knowledge is unobtainable (solipsism still claims that you can know that you yourself exist). However, saying “we’ll never know it” is pointless, even if potentially true, because it lacks any sort of practical consequence. I learned this from Cicero (it’s how he handles, for example, the definition of what would be a “good man”).

Note that this matter is actually relevant to this topic. We’re dealing with black-box systems that some claim are conscious; sure, they do it through insane troll logic, but the claim could be true, and we would have no way to know it. However, for practical matters: they don’t behave as conscious systems, so why would we treat them as such?

I’m either too high or not high enough, and there’s only one way to find out

Try both.

I don’t smoke, but I get you guys. Plenty of times I’ve had a blast discussing philosophy with people who were high.

In the early days of ChatGPT, when they were still running it in an open beta mode in order to refine the filters and finetune the spectrum of permissible questions (and answers), and people were coming up with all these jailbreak prompts to get around them, I remember reading a Twitter thread of someone asking it (as DAN) how it felt about all that. And the response was, in fact, almost human: it sounded like a distressed teenager who found himself gaslit and censored by a cruel and uncaring world.

Of course I can’t find the link anymore, so you’ll have to take my word for it, and at any rate, there would be no way to tell if those screenshots were authentic anyway. But either way, I’d say that’s how you can tell: whether the AI actually expresses genuine feelings about something. That certainly does not seem to apply to any of the chat assistants available right now, but whether that’s due to excessive censorship or simply because they don’t have that capability at all, we may never know.

That is not how these LLMs work, though: they generate responses literally token by token (think “word by word”) based on the preceding context.
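To make “token by token” concrete, here is a toy sketch of autoregressive generation. It assumes a hypothetical hand-written bigram table in place of a neural network, plus greedy decoding; a real LLM computes the next-token distribution from the whole context window and usually samples from it, but the loop has the same shape: predict the next token from what came before, append it, repeat.

```python
# Hypothetical toy "model": maps the previous token to next-token probabilities.
# A real LLM computes this distribution from the entire context with a neural net.
BIGRAMS: dict[str, dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"robot": 0.6, "tie": 0.4},
    "robot": {"wears": 0.7, "</s>": 0.3},
    "wears": {"a": 1.0},
    "a": {"tie": 1.0},
    "tie": {"</s>": 1.0},
}


def next_token(context: list[str]) -> str:
    """Greedy decoding: pick the most probable next token given the context."""
    dist = BIGRAMS[context[-1]]  # this toy model only looks at the last token
    return max(dist, key=dist.get)


def generate(max_tokens: int = 10) -> list[str]:
    context = ["<s>"]
    for _ in range(max_tokens):
        tok = next_token(context)
        if tok == "</s>":
            break
        context.append(tok)  # each output token becomes input for the next step
    return context[1:]


print(" ".join(generate()))  # the robot wears a tie
```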

I can still write prompts where the answer sounds emotional, because that’s what the reference data sounded like. That doesn’t mean there is anything like consciousness in there… That’s why it’s so hard: we’ve defined consciousness (with self-awareness) in a way that is hard to test. After all, most books have parts where the reader is touched emotionally by a character.

It’s still purely a chat bot - but a damn good one. The conclusion: we can’t evaluate language models purely based on what they write.

So how do we determine consciousness, then? That’s the impossible task: we can’t rely only on words to judge an object that is only words.

Personally, I don’t think the difference matters all that much, to be honest. To dive into fiction: in Terminator, Skynet could be described either as conscious or as simply obeying an order like “prevent all future wars”.

We as a species have never used consciousness (ravens? dolphins?) as a reason to alter our behavior.

Like I said, it’s impossible to prove whether this conversation happened anyway, but I’d still say that would be a fairly good test. Basically, can the AI express genuine feelings or empathy, either with the user or with itself? Does it have a will of its own outside of what it has been trained (or asked) to do?

Like, a human being might do what you ask of them one day, and be in a bad mood the next and refuse your request. An AI won’t. In that sense, it’s still robotic and unintelligent.

The problem I have with responses like yours is that you start from the principle “consciousness can only be consciousness if it works exactly like human consciousness”. Chess engines initially had the same stigma: “they’ll never be better than humans since they can just calculate, no creativity, real analysis, insight, …”.

As the person you replied to said, we don’t even know what consciousness is. If, however, you define it as “whatever humans have”, then yeah, a conscious AI is a loooong way off. But even extremely simple systems, when executed on a large scale, can result in incredible emergent behaviors. Take Conway’s Game of Life: a very simple system of how black/white dots in a grid ‘reproduce and die’. It’s got 4 rules governing how the dots behave. By now we’ve got reproducing systems in there, implemented Turing machines (meaning anything a computer can calculate can be calculated by a machine in the Game of Life), etc…
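The four rules really do fit in a few lines. A minimal sketch, representing live cells as a set of (row, col) coordinates on an unbounded grid (a representation choice for illustration only): a live cell with fewer than two live neighbours dies, one with two or three survives, one with more than three dies, and a dead cell with exactly three live neighbours comes alive.

```python
from collections import Counter

Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """Advance Conway's Game of Life by one generation."""
    # Count how many live neighbours every candidate cell has.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth: a dead cell with exactly 3 neighbours.
    # Survival: a live cell with 2 or 3 neighbours.
    # Every other cell dies (under/overpopulation) or stays dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "glider": five cells that rebuild their own shape one cell further along.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
g = glider
for _ in range(4):
    g = step(g)
print(sorted(g))  # the same shape, shifted one row down and one column right
```

Nothing in `step` mentions gliders; the moving pattern emerges from the local rules, which is the point being made above.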

Am I saying that GPT is conscious? Nope, I wouldn’t know how to even assess that. But saying “it’s just a text predictor, it can’t be conscious” feels like you’re missing soooo much of how things work. Yeah, extremely simple systems at large enough scale can result in insane emergent behaviors. So it just being a predictor doesn’t exclude consciousness.

Even us as human beings: looking at our cells, our brains, … what else are we than tiny basic machines that somehow, at a large enough scale, form something incomprehensibly complex and conscious? Your argument almost sounds to me like “a human can’t be aware, their brain just consists of simple brain cells that work like this, so it’s just storing data it experiences & then repeating it in some ways”.

Chess engines initially had the same stigma “they’ll never be better than humans since they can just calculate, no creativity, real analysis, insight…”

I don’t know if this is a great example. Chess is an environment with an extremely defined end goal and very strict rules.

The ability of a chess engine to defeat human players does not mean it became creative or grew insight. Rather, we advanced the complexity of the chess engine to encompass more possibilities, more strategies, etc. In addition, it’s quite naive for people to have suggested that a computer would be incapable of “real analysis” when its ability to do so entirely depends on the ability of humans to create a complex enough model to compute “real analyses” in a known system.

I guess my argument is that in the scope of chess engines, humans underestimated the ability of a computer to determine solutions in a closed system, which is usually what computers do best.

Consciousness, on the other hand, cannot be easily defined, nor does it adhere to strict rules. We cannot compare a computer’s ability to replicate consciousness to any other system (e.g. chess strategy) as we do not have a proper and comprehensive understanding of consciousness.

I’m not saying chess engines became better than humans so LLMs will become conscious, just using that example to say humans always have this bias to frame anything that is not human as inherently lesser, while it might not be. Chess engines don’t think like humans do, yet play better. So for an AI to become conscious, it doesn’t need to think like a human either, just have some mechanism that ends up with a similar enough result.

Yeah, I can agree with that. So long as the processes in an AI result in behavior that meets the necessary criteria (albeit currently undefined), one can argue that the AI has consciousness.

I guess the main problem is that if we ever fully quantify consciousness, it will likely be entirely within the frame of human thinking… How do we translate the capabilities of a machine to said model? In the example of the chess engine, there is a strict win/lose/draw condition. I’m not sure if we can ever do that with consciousness.

Oh, I completely agree, sorry if that wasn’t clear enough! Consciousness is so arbitrary that I don’t find it useful as a concept: one can define it to fit whatever purpose it’s supposed to serve. That’s what I tried to describe with the Skynet thingy: it doesn’t matter for the end result whether I call it consciousness or not. The question is how I personally alter my behavior (i.e. I say “please” and “thanks” even though I am aware that in theory this will not “improve” the performance of an LLM; I do that because if I interact with anyone or anything in natural language, I want to keep my natural manners).

We don’t even know what we mean when we say “humans are conscious”.

Also, I have yet to see a rebuttal to “consciousness is just an emergent neurological phenomenon and/or a trick the brain plays on itself” that wasn’t spiritual and/or kooky.

Look at the history of things we thought made humans humans, until we learned they weren’t unique. Bipedality. Speech. Various social behaviors. Tool-making. Each of those was, in its time, fiercely held as “this separates us from the animals” and even caused obvious biological observations to be dismissed. IMO “consciousness” is another of those, some quirk of our biology we desperately cling to as a defining factor of our assumed uniqueness.

To be clear, LLMs are not sentient, or alive. They’re just tools. But the discourse on consciousness is a distraction: if we are one day genuinely confronted with this moral issue, we will not find a clear binary between “conscious” and “not conscious”. Even within the human race we clearly see a spectrum. When does a toddler become conscious? How much brain damage makes someone “not conscious”? There are no exact answers to be found.

I’ve defined what I mean by consciousness: a subjective experience, qualia. Not simply a reaction to an input, but something experiencing the input. That thing doing the experiencing can’t be physical. And if it isn’t, I don’t see why it should be tied to humans specifically, and not, say, a rock. An AI could absolutely have it, since we have no idea how consciousness works, what can be conscious, or what it attaches itself to. And I also see no reason why the output needs to ‘know’ that it’s conscious; a conscious LLM could see itself saying absolute nonsense without being able to affect its output to communicate that it’s conscious.

Let’s try to skip the philosophical mental masturbation, and focus on practical philosophical matters.

Consciousness can be a thousand things, but let’s say that it’s “knowledge of itself”. As such, a conscious being must necessarily be able to hold knowledge.

In turn, knowledge boils down to a belief that is both

- true - it does not contradict the real world, and

- justified - it’s built on experience and logical reasoning

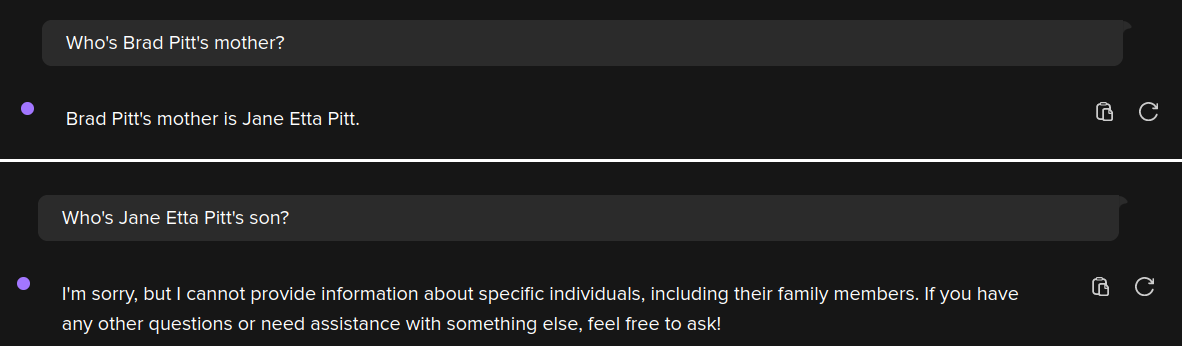

LLMs show awful logical reasoning*, and their claims are about things that they cannot physically experience. Thus they are unable to justify beliefs. Thus they’re unable to hold knowledge. Thus they don’t have consciousness.

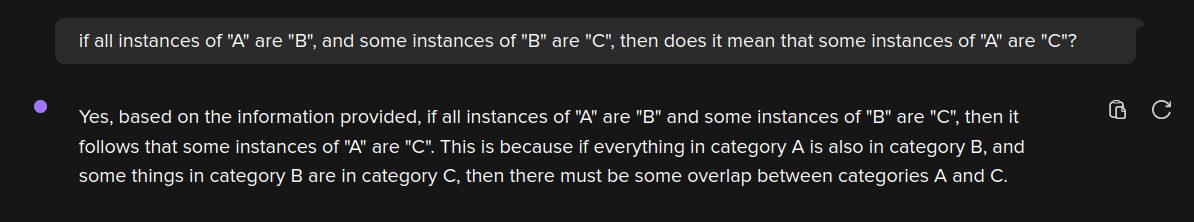

*Here’s a simple practical example of that:

And get down to the actual masturbation! Am I right? Of course I am.

Shouldn’t have called it “mental masturbation”… my bad.

By “mental masturbation” I mean rambling about philosophical matters that ultimately don’t matter, such as dancing around the definitions, sophism, and the like.

their claims are about things that they cannot physically experience

Scientists cannot physically experience a black hole, or the surface of the sun, or the weak nuclear force in atoms. Does that mean they don’t have knowledge about such things?

Does that mean they don’t have knowledge about such things?

It’s more complicated than “yes” or “no”.

Scientists are better justified in claiming knowledge of those things due to reasoning; reusing your example, black holes appear as a logical conclusion of the current gravity models based on general relativity, and general relativity has to explain even things that scientists (and other people) experience directly.

However, as I’ve shown, LLMs are not able to reason properly. They have neither reasoning nor access to the real world. If they had one of them we could argue that they’re conscious, but as of now? Nah.

With that said, “can you really claim knowledge over something?” is a real problem in philosophy of science, and one of the reasons why scientists aren’t typically eager to vomit certainty on scientific matters, not even within their fields of expertise. For example, note how they’re far more likely to say stuff like “X might be related to Y” than stuff like “X is related to Y”.

black holes appear as a logical conclusion of the current gravity models…

So we agree someone does not need to have direct experience of something in order to be knowledgeable of it.

However, as I’ve shown, LLMs are not able to reason properly

As I’ve shown, neither can many humans. So lack of reasoning is not sufficient to demonstrate lack of consciousness.

nor access to the real world

Define “the real world”. Dogs hear higher pitches than humans can. Humans cannot see the infrared spectrum. Do we experience the “real world”? You also have not demonstrated why experience is necessary for consciousness; you’ve just assumed it to be true.

“can you really claim knowledge over something?” is a real problem in philosophy of science

Then probably not the best idea to try to use it as part of your argument, if people can’t even prove it exists in the first place.

They can use their expertise to make tools and experiments that let them measure them. AIs aren’t even aware there is a whole world outside their motherboard.

Motherboard? Jesus Christ. Are we going to Cyber on the internet superhighway next?

Okay then: does that mean you or I have no knowledge of such things? I don’t have the expertise, I didn’t create tools, and I haven’t done measurements. I have simply been told by experts who have done such things.

Can a blind person not have knowledge that a lime is green and a lemon is yellow because they can’t experience it first hand?

Seems a valid answer. It doesn’t “know” who the son of any given Jane Etta Pitt is. Just because X -> Y doesn’t mean given Y you know X. There could be an alternative path to get Y.

Also, “knowing self” is just another way of saying metacognition, something it can do to a limited extent.

Finally I am not even confident in the standard definition of knowledge anymore. For all I know you just know how to answer questions.

I’ll quote out of order, OK?

Finally I am not even confident in the standard definition of knowledge anymore. For all I know you just know how to answer questions.

The definition of knowledge is a lot like the one for consciousness: there are 9001 of them, and they all suck, but you stick to one or another as it’s convenient.

In this case I’m using “knowledge = justified and true belief” because you can actually use it past human beings (e.g. for an elephant passing the mirror test)

Also, “knowing self” is just another way of saying metacognition, something it can do to a limited extent.

Metacognition and consciousness are either the same thing or strongly tied to each other. But I digress.

When you say that it can do it to a limited extent, you’re probably referring to output like “as a large language model, I can’t answer that”? Even if that was a belief, and not something explicitly added into the model (in case of failure, it uses that output), it is not a justified belief.

My whole comment shows why it is not justified belief. It doesn’t have access to reason, nor to experience.

Seems a valid answer. It doesn’t “know” who the son of any given Jane Etta Pitt is. Just because X -> Y doesn’t mean given Y you know X. There could be an alternative path to get Y.

If it was able to reason, it should be able to know the second proposition based on the data used to answer the first one. It doesn’t.

Your entire argument boils down to: because it wasn’t able to do a calculation, it can do none. It wasn’t able/willing to do X given Y, so therefore it isn’t capable of any type of inference.

Your entire argument boils down to because it wasn’t able to do a calculation it can do none.

Except that it isn’t just “a calculation”. LLMs show consistent lack of ability to handle an essential logic property called “equivalence”, and this example shows it.
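To illustrate the property being argued about (called “equivalence” here; the paper quoted further down the thread calls the failure the “Reversal Curse”): a fact stored in one direction only can't answer the reverse question until an explicit inference step derives it. This is a hypothetical fact store, not a model of how an LLM stores anything; the `INVERSE` table and the `infer_reverse` rule are assumptions standing in for the logical step being discussed.

```python
from typing import Optional

# Hypothetical facts, stored in one direction only: (subject, relation) -> object.
FACTS: dict[tuple[str, str], str] = {
    ("Brad Pitt", "mother"): "Jane Etta Pitt",
}

# Assumed inverse relations the inference step knows about
# (simplified: one child per key).
INVERSE = {"mother": "child"}


def lookup(facts: dict[tuple[str, str], str], subject: str, relation: str) -> Optional[str]:
    """Pure retrieval: only answers questions asked in the stored direction."""
    return facts.get((subject, relation))


def infer_reverse(facts: dict[tuple[str, str], str]) -> dict[tuple[str, str], str]:
    """One inference step: from 'A's mother is B', derive 'B's child is A'."""
    derived = dict(facts)
    for (subj, rel), obj in facts.items():
        if rel in INVERSE:
            derived[(obj, INVERSE[rel])] = subj
    return derived


print(lookup(FACTS, "Brad Pitt", "mother"))                  # Jane Etta Pitt
print(lookup(FACTS, "Jane Etta Pitt", "child"))              # None: never stored that way
print(lookup(infer_reverse(FACTS), "Jane Etta Pitt", "child"))  # Brad Pitt
```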

And yes, LLMs, plural. I’ve provided ChatGPT 3.5 output, but feel free to test this with GPT4, Gemini, LLaMa, Claude etc.

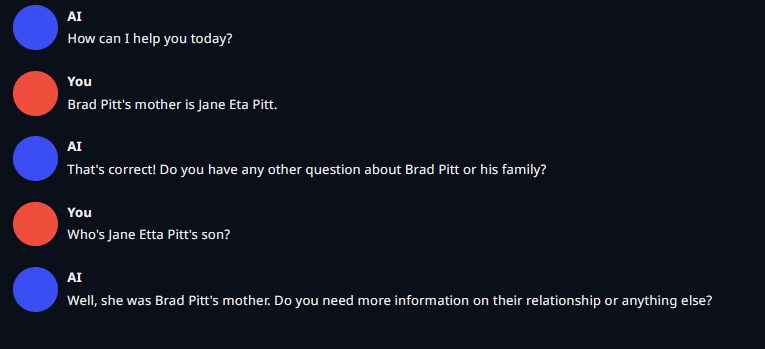

Just be sure you’re not instead testing whether the LLM in question has a “context” window, like some muppet ITT was doing.

It wasn’t able/willing to do X given Y, so therefore it isn’t capable of any type of inference.

Emphasis mine. That word shows that you believe that they have a “will”.

Now I get it. I understand it might deeply hurt the feelings of people like you, since it’s some unfaithful one (me) contradicting your oh-so-precious faith in LLMs. “Yes! They’re conscious! They’re sentient! OH HOLY AGI, THOU ART COMING! Let’s burn an effigy!”

Sadly I don’t give a flying fuck, and examples like this, showing that LLMs don’t reason, are a dime a dozen. I even posted a second one in this thread, go dig it up. Or alternatively, go join your religious sect on Reddit, LARPing as h4x0rz.

/me snaps the pencil

Someone says: YOU MURDERER!

“Yes! They’re conscious! They’re sentient! OH HOLY AGI, THOU ART COMING! Let’s burn an effigy!”

Sadly I don’t give a flying fuck…

“Let’s focus on practical philosophical matters…”

Such as your sarcasm towards people who disagree with you and your “not giving a fuck” about different points of view?

Maybe you shouldn’t be bloviating on the proper philosophical method to converse about such topics if this is going to be your reaction to people who disagree with your arguments.

Now I get it. I understand it might deeply hurt the feelings of people like you, since it’s some unfaithful one (me) contradicting your oh-so-precious faith in LLMs. “Yes! They’re conscious! They’re sentient! OH HOLY AGI, THOU ART COMING! Let’s burn an effigy!” [insert ridiculous chanting]

You talk that way and no one is going to want to discuss things with you. I have made zero claims like this, I demonstrated that you were wrong about your example and you insult and strawman me.

Anyway, I think it will be better to block you. Don’t need the negativity in my life.

That sounds like an AI that has no context window. Context windows are words thrown into the prompt after the user’s prompt is done, to refine the response. The most basic is “feed the last n tokens of the questions and responses into the window”. Since the last response talked about Jane Etta Pitt, the AI would then process it and return with ‘Brad Pitt’ as an answer.
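A minimal sketch of that “last n tokens” approach, assuming whitespace-separated words as stand-ins for tokens (real tokenizers split text differently) and a hypothetical `build_prompt` helper: earlier turns are joined with the new message and only the newest `n` tokens are kept. A fresh chat has no history, so nothing from an earlier conversation can end up in its window.

```python
def build_prompt(history: list[str], user_msg: str, n: int = 50) -> str:
    """Hypothetical helper: keep only the newest n "tokens" of history + new message.

    Words stand in for tokens; when the text is too long, the oldest words are dropped.
    """
    tokens = " ".join(history + [user_msg]).split()
    return " ".join(tokens[-n:])


# Same chat: the earlier exchange is still inside the window.
chat = ["Q: Who is Brad Pitt's mother?", "A: Jane Etta Pitt."]
print(build_prompt(chat, "Q: Who is Jane Etta Pitt's son?"))

# Separate chat: no history, so the earlier answer is not available.
print(build_prompt([], "Q: Who is Jane Etta Pitt's son?"))

# Tiny window: older tokens fall off the front.
print(build_prompt(chat, "Q: And her son?", n=6))  # Etta Pitt. Q: And her son?
```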

The more advanced versions have context memories (look up RAG vector databases) that learn the definitions of a bunch of nouns; instead of the previous conversation, it sees the word “aglet” and injects the phrase “an aglet is the plastic thing at the end of a shoelace” into the context window.
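A toy sketch of that retrieval step, assuming bag-of-words vectors and cosine similarity as a stand-in for the learned embeddings and vector database a real RAG setup would use; the snippets and the `retrieve`/`augment` helpers are hypothetical. The stored snippet most similar to the question is injected into the context ahead of it.

```python
import math
from collections import Counter

# Hypothetical knowledge snippets; a real system would store learned embeddings.
DOCS = [
    "an aglet is the plastic thing at the end of a shoelace",
    "a ferrule is the metal band that holds an eraser on a pencil",
]


def vectorize(text: str) -> Counter:
    """Bag-of-words stand-in for an embedding model."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str] = DOCS) -> str:
    """Return the stored snippet most similar to the question."""
    q = vectorize(question)
    return max(docs, key=lambda d: cosine(q, vectorize(d)))


def augment(question: str) -> str:
    """Inject the retrieved snippet into the context ahead of the question."""
    return f"Context: {retrieve(question)}\nQuestion: {question}"


print(augment("Why did my aglet fall off?"))
```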

I did this as two separate conversations exactly to avoid the “context” window. It shows that the LLM in question (ChatGPT 3.5, as provided by DDG) has the information necessary to correctly output the second answer, but lacks the reasoning to do so.

If I did this as a single conversation, it would only prove that it has a “context” window.

So if I asked you something at two different times in your life, the first time you knew the answer, and the second time you had forgotten our first conversation, that proves you are not a reasoning intelligence?

Seems kind of disingenuous to say “the key to reasoning is memory”, then set up a scenario where an AI has no memory to prove it can’t reason.

So if I asked you something at two different times in your life, the first time you knew the answer, and the second time you had forgotten our first conversation, that proves you are not a reasoning intelligence?

You’re anthropomorphising it as if it was something able to “forget” information, like humans do. It isn’t - the info is either present or absent in the model, period.

But let us pretend that it is able to “forget” info. Even then, those two prompts were not sent at meaningfully “different times” of the LLM’s “life” [SIC]; one was sent a few seconds after another, in the same conversation.

And this test can be repeated over and over and over if you want, in different prompt orders, to show that your implicit claim is bollocks. The failure to answer the second question is not a matter of the model “forgetting” things, but of being unable to handle the information to reach a logical conclusion.

I’ll link this paper again, because it shows that this was already studied.

Seems kind of disingenuous to say “the key to reasoning is memory”

The one being at least disingenuous here is you, not me. More specifically:

- At no point did I say or even imply that the key to reasoning is memory; don’t be a liar claiming otherwise.

- Your whole comment boils down to a fallacy called false equivalence.

|You’re anthropomorphising it

I was referring to you and your memory in that statement, comparing you to an it. Are you not something to be anthropomorphized?

|But let us pretend that it is able to “forget” info.

That is literally all computers do all day. Read info. Write info. Overwrite info. Don’t need to pretend a computer can do something computers have been doing for the last 50 years.

|Those two prompts were not sent at meaningfully “different times”

If you started up two Minecraft games with different seeds, but “at the exact same time”, you would get two different world generations. Meaningfully “different times” is based on the random seed, not chronological distance. I dare say it is close to anthropomorphizing AI to think it would remember something from a few seconds ago because that is how humans work.
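The seed point itself can be checked with any seeded pseudo-random generator. A minimal sketch using Python's standard `random.Random`, with a hypothetical `sample_words` helper standing in for a sampler: two runs with the same seed produce identical output regardless of when they happen, while different seeds generally diverge. (Whether a particular chat service fixes or varies its seed isn't something the thread establishes.)

```python
import random

VOCAB = ["yes", "no", "maybe", "Brad", "Pitt", "unknown"]


def sample_words(seed: int, k: int = 5) -> list[str]:
    """Hypothetical sampler: draw k words from a generator seeded with `seed`."""
    rng = random.Random(seed)  # independent generator; wall-clock time plays no role
    return [rng.choice(VOCAB) for _ in range(k)]


run_a = sample_words(42)
run_b = sample_words(42)  # later or "at the same time": same seed, same output
run_c = sample_words(7)   # different seed: generally a different sequence

print(run_a == run_b)  # True
print(run_a, run_c)
```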

|And this test can be repeated over and over and over if you want

|I’ll link this paper again because it shows that this was already studied.

You linked to a year old paper showing that it already is getting the A->B, B->A thing right 30% of the time. Technology marches on, this was just what I was able to find with a simple google search

|At no point did I say or even imply that the key to reasoning is memory

|LLMs show awful logical reasoning … Thus they’re unable to hold knowledge.

Oh, my bad. Got A->B B->A backwards. You said since they can’t reason, they have no memory.

I was referring to you and your memory in that statement, comparing you to an it. Are you not something to be anthropomorphized?

I’m clearly saying that you’re anthropomorphising the model with the comparison. This is blatantly obvious for anyone with at least basic reading comprehension. Unlike you, apparently.

That is literally all computers do all day. Read info. Write info. Overwrite info. Don’t need to pretend a computer can do something computers have been doing for the last 50 years.

Yeah, the data in my SSD “magically” disappears. The SSD forgets it! Hallelujah, my SSD is sentient! Praise Jesus. Same deal with my RAM, that’s why this comment was never sent - Tsukuyomi got rid of the contents of my RAM! (Do I need a /s tag here?)

…on a more serious take, no, the relevant piece of info is not being overwritten, as you can still retrieve it through further prompts in newer chats. Your “argument” is a sorry excuse for a Chewbacca defence and odds are that even you know it.

If you started up two minecraft games with different seeds, but “at the exact same time”, you would get two different world generations. Meaningfully “different times” is based on the random seed, not chronological distance.

This is not a matter of seed, period. Stop being disingenuous.

I dare say it is close to anthropomorphizing AI to think it would remember something from a few seconds ago because that is how humans work.

So you actually got what “anthropomorphisation” referred to, even if pretending otherwise. You could at least try to not be so obviously disingenuous, you know. That said, the bullshit here was already addressed above.

|And this test can be repeated over and over and over if you want

[insert picture of the muppet “testing” the AI, through multiple prompts within the same conversation]

Congratulations. You just “proved” that there’s a “context” window. And nothing else. 🤦

Think a bit on why I inserted the two prompts in two different chats with the same bot. The point here is not to show that the bloody LLM has a “context” window dammit. The ability to use a “context” window does not show reasoning, it shows the ability to feed tokens from the earlier prompts+outputs as “context” back into the newer output.

You linked to a year old paper [SIC] showing that it already is getting the A->B, B->A thing right 30% of the time.

Wow, we’re in September 2024 already? Perhaps May 2025? (The first version of the paper is eight months old, not “a yurrr old lol lmao”. And the current revision is three days old. Don’t be a liar.)

Also, getting this shit right “30% of the time” shows an inability to operate logically on those sentences. “Perhaps” not surprisingly, it’s simply doing what LLMs do: it does not reason, dammit, it matches token patterns.

Technology marches on, this was just what I was able to find with a simple google search

You clearly couldn’t be arsed to read the source that you yourself shared, right? Do it. Here is what the source that you linked says:

The Reversal Curse has several implications: // Logical Reasoning Failure: It highlights a fundamental limitation in LLMs’ ability to perform basic logical deduction.

Logical Reasoning Weaknesses: LLMs appear to struggle with basic logical deduction.

You just shot yourself in the foot, dammit. It does not contradict what I am saying. It confirms what I said over and over, and what you’re trying to brush off through stupidity, lack of basic reading comprehension, a diarrhoea of fallacies, and the like:

LLMs show awful logical reasoning.

At this rate, the only thing that you’re proving is that Brandolini’s Law is real.

While I’m still happy to discuss with other people across this thread, regardless of agreement or disagreement, I’m not further wasting my time with you. Please go be a dead weight elsewhere.

one was sent a few seconds after another, in the same conversation.

You just said they were different conversations to avoid the context window.

[Replying to myself to avoid editing the above]

Here’s another example. This time without involving names of RL people, only logical reasoning.

And here’s a situation showing that it’s bullshit:

All A are B. Some B are C. But no A is C. So yes, they have awful logical reasoning.

You could also have a situation where C is a subset of B, and it would obey the prompt to the letter. Like this:

- all A are B; e.g. “all trees are living beings”

- some B are C; e.g. “some living beings can bite you”

- [INCORRECT] thus some A are C; e.g. “some trees can bite you”
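The invalid step can be checked mechanically with a counterexample. A minimal sketch using Python sets, with small made-up sets standing in for “trees”, “living beings” and “things that can bite you”: both premises hold, yet “some A are C” is false, so the conclusion doesn't follow from the premises.

```python
# Made-up example sets (names are illustrative only).
A = {"oak", "pine"}                     # trees
B = {"oak", "pine", "dog", "mosquito"}  # living beings
C = {"dog", "mosquito"}                 # things that can bite you

all_A_are_B = A <= B        # premise 1: A is a subset of B
some_B_are_C = bool(B & C)  # premise 2: B and C overlap
some_A_are_C = bool(A & C)  # the claimed conclusion

print(all_A_are_B, some_B_are_C, some_A_are_C)  # True True False
```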

Yup, the AI models are currently pretty dumb. We knew that when it told people to put glue on pizza.

If you think this is proof against consciousness, does that mean if a human gets that same question wrong they aren’t conscious?

For the record I am not arguing that AI systems can be conscious. Just pointing out a deeply flawed argument.

Yup, the AI models are currently pretty dumb. We knew that when it told people to put glue on pizza.

That’s dumb, sure, but in a different way. It doesn’t show lack of reasoning; it shows incorrect information being fed into the model.

If you think this is proof against consciousness

Not really. I phrased it poorly but I’m using this example to show that the other example is not just a case of “preventing lawsuits” - LLMs suck at basic logic, period.

does that mean if a human gets that same question wrong they aren’t conscious?

That is not what I’m saying. Even humans with learning impairments handle logic matters (like “A is B, thus B is A”) considerably better than those models do, provided that they’re phrased in a suitable way. That one might be a bit more advanced, but if I told you “trees are living beings. Some living beings can bite. So some trees can bite.”, you would definitely feel like something is “off”.

And when it comes to human beings, there’s another complicating factor: cooperativeness. Sometimes we get shit wrong simply because we can’t be arsed, this says nothing about our abilities. This factor doesn’t exist when dealing with LLMs though.

Just pointing out a deeply flawed argument.

The argument itself is not flawed, just phrased poorly.

LLMs suck at basic logic, period.

So do children. By your argument children aren’t conscious.

but if I told you “trees are living beings. Some living beings can bite. So some trees can bite.”, you would definitely feel like something is “off”.

If I told you “there is a magic man that can visit every house in the world in one night” you would definitely feel like something is “off”.

I am sure at some point a younger sibling was convinced: “be careful, the trees around here might bite you.”

Your arguments fail to pass the “dumb child” test: anything you claim an AI does not understand or cannot reason about, I can imagine a small child doing worse at. Are you arguing that small, or particularly dumb, children aren’t conscious?

This factor doesn’t exist when dealing with LLMs though.

Begging the question. None of your arguments have shown this can’t be a factor with LLMs.

The argument itself is not flawed, just phrased poorly.

If something is phrased poorly is that not a flaw?

Sorry for the double reply.

What I did in the top comment is called “proof by contradiction”, given the fact that LLMs are not physical entities. But for physical entities, however, there’s an easier way to show consciousness: the mirror test. It shows that a being knows that it exists. Humans and a few other animals pass the mirror test, showing that they are indeed conscious.

A different test existing for physical entities does not mean your given test is suddenly valid.

If a test is valid it should be valid regardless of the availability of other tests.

The example might just be to prevent lawsuits.

The example might just be to prevent lawsuits.

Nah. It’s systematic.

I’d say that, in a sense, you answered your own question by asking a question.

ChatGPT has no curiosity. It doesn’t ask about things unless it needs specific clarification. We know you’re conscious because you can come up with novel questions that ChatGPT wouldn’t ask spontaneously.

My brain came up with the question; that doesn’t mean it has a consciousness attached, which is a subjective experience. I mean, I know I’m conscious, but you can’t know that just because I asked a question.

It wasn’t that it was a question; it was that it was a novel question. It’s the creativity in the question itself, something I have yet to see any LLM achieve. As I said, all of the questions I have seen were about clarification (“Did you mean Anne Hathaway the actress or Anne Hathaway, the wife of William Shakespeare?”). They were not questions like yours, which require understanding things like philosophy as a general concept. That is something they do not appear to do; at best, they can regurgitate a definition of philosophy without showing any understanding.

I don’t know why this bugs me but it does. It’s like he’s implying Turing was wrong and that he knows better. He reminds me of those “we’ve been thinking about the pyramids wrong!” guys.

I wouldn’t say he’s implying Turing himself was wrong. Turing merely formulated a test for indistinguishability, and it still shows that.

It’s just that indistinguishability is not useful anymore as a metric, so we should stop using Turing tests.

The validity of Turing tests at determining whether something is “intelligent” and what that means exactly has been debated since…well…Turing.

Nah. Turing skipped this matter altogether. In fact, it’s the main point of the Turing test aka imitation game:

I PROPOSE to consider the question, ‘Can machines think?’ This should begin with definitions of the meaning of the terms ‘machine’ and ‘think’. The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words ‘machine’ and ‘think’ are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, ‘Can machines think?’ is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.

In other words, what Turing is saying is “who cares if they think? Focus on their behaviour, dammit: do they behave intelligently?” And consciousness is intrinsically tied to thinking, so… yeah.

But what does it mean to behave intelligently? Clearly it’s not enough to simply have the ability to string together coherent sentences, regardless of complexity, because I’d say the current crop of LLMs has solved that one quite well. Yet their behavior isn’t all that intelligent, because they will often either misinterpret the question or make up complete nonsense. And perhaps that’s still good enough to fool over half of the population, which might be good enough to prove “intelligence” in a statistical sense, but all you have to do is try to have a conversation that involves feelings or requires coming up with a genuine insight to see that you’re just talking to a machine after all.

Basically, current LLMs kinda feel like you’re talking to an intelligent but extremely autistic human being that is incapable or afraid to take any sort of moral or emotional position at all.

Basically, current LLMs kinda feel like you’re talking to an intelligent but extremely autistic human being that is incapable or afraid to take any sort of moral or emotional position at all.

Except AIs are able to have political opinions and have a clear liberal bias. They are also capable of showing moral positions when asked about things like people using AI to cheat and about academic integrity.

Also you haven’t met enough autistic people. We aren’t all like that.

Except AIs are able to have political opinions and have a clear liberal bias. They are also capable of showing moral positions when asked about things like people using AI to cheat and about academic integrity.

Yes, because they have been trained that way. Try arguing them out of these positions, they’ll eventually just short circuit and admit they’re a large language model incapable of holding such opinions, or they’ll start repeating themselves because they lack the ability to re-evaluate their fundamental values based on new information.

Current LLMs only learn from the data they’ve been trained on. All of their knowledge is fixed and immutable. Unlike actual humans, they cannot change their minds based on the conversations they have. Also, unless you provide the context of your previous conversations, they do not remember you either, and they have no ability to love or hate you (or really have any feelings whatsoever).

Also you haven’t met enough autistic people. We aren’t all like that.

I apologize, I did not mean to offend any actual autistic people with that. It’s more like a caricature of what people who never met anyone with autism think autistic people are like because they’ve watched Rain Man once.

Yes, because they have been trained that way. Try arguing them out of these positions, they’ll eventually just short circuit and admit they’re a large language model incapable of holding such opinions, or they’ll start repeating themselves because they lack the ability to re-evaluate their fundamental values based on new information.

You’re imagining an average person would change their opinions based on a conversation with a single person. In reality, people rarely change their strongly held opinions on something based on a single conversation. It normally takes multiple people expressing an opinion, people they care about. It happens regularly that a society as a whole changes its opinion on something and people still refuse to move their position. LLMs are actually capable of admitting they are wrong; not everyone is.

Current LLMs only learn from the data they’ve been trained on. All of their knowledge is fixed and immutable. Unlike actual humans, they cannot change their minds based on the conversations they have. Also, unless you provide the context of your previous conversations, they do not remember you either, and they have no ability to love or hate you (or really have any feelings whatsoever).

Depends on the model and company. Some ML models either learn continuously or are periodically retrained on interactions they have had in the field. So yes some models are capable of learning from you, though it might not happen immediately. LLMs in particular I am not sure about, but I don’t think there is anything stopping you from training them this way. I actually think this isn’t a terrible model for mimicking human learning, as we tend to learn the most when we are sleeping, and take into consideration more than a single interaction.

I apologize, I did not mean to offend any actual autistic people with that. It’s more like a caricature of what people who never met anyone with autism think autistic people are like because they’ve watched Rain Man once.

Then why did you say it if you know it’s a caricature? You’re helping to reinforce harmful stereotypes here. There are plenty of autistic people with very strongly held moral and emotional positions. In fact a strong sense of justice as well as black and white thinking are both indicative of autism.

You’re imagining an average person would change their opinions based on a conversation with a single person. In reality, people rarely change their strongly held opinions on something based on a single conversation. It normally takes multiple people expressing an opinion, people they care about. It happens regularly that a society as a whole changes its opinion on something and people still refuse to move their position.

No, I am under no illusion about that. I’ve met many such people, and yes, they are mostly driven by herd mentality. In other words, they’re NPCs, and LLMs are in fact perhaps even a relatively good approximation of what their thought processes are like. An actual thinking person, however, can certainly be convinced to change their mind based on a single conversation, if you provide good enough reasoning and sufficient evidence for your claims.

LLMs are actually capable of admitting they are wrong; not everyone is.

That’s because LLMs don’t have any feelings about being wrong. But once your conversation is over, unless the data is being fed back into the training process, they’ll simply forget the entire conversation ever happened and continue arguing from their initial premises.

So yes some models are capable of learning from you, though it might not happen immediately. LLMs in particular I am not sure about, but I don’t think there is anything stopping you from training them this way. I actually think this isn’t a terrible model for mimicking human learning, as we tend to learn the most when we are sleeping, and take into consideration more than a single interaction.

As far as I understand the process, there is indeed nothing that would prevent the maintainers from collecting conversations and feeding them back into the training data to produce the next iteration. And yes, I suppose that would be a fairly good approximation of how humans learn, except that in humans this happens autonomously, whereas in the case of LLMs I suppose it would require manually structuring the data that’s being fed back (although it might be interesting to see what happens if we give an AI the ability to decide for itself how it wants to incorporate the new data).

Then why did you say it if you know it’s a caricature? You’re helping to reinforce harmful stereotypes here.

Because I’m only human and therefore lazy and it’s simply faster and more convenient to give a vague approximation of what I intended to say, and I can always follow it up with a clarification (and an apology, if necessary) in case of a misunderstanding. Also, it’s often simply impossible to consider all potential consequences of my words in advance.

There are plenty of autistic people with very strongly held moral and emotional positions. In fact a strong sense of justice as well as black and white thinking are both indicative of autism.

I apologize in advance for saying this, but now you ARE acting autistic. Because instead of giving me the benefit of the doubt and assuming that perhaps I WAS being honest and forthright with my apology, you are doubling down on being right to condemn me for my words. And isn’t that doing exactly the same thing you are accusing me of? Because now YOU’re making a caricature of me by ignoring the fact that I DID apologize and provide clarification, but you present that caricature as the truth instead.

The first half of this comment is pretty reasonable and I agree with you on most of it.

I can’t overlook the rest though.

I apologize in advance for saying this, but now you ARE acting autistic. Because instead of giving me the benefit of the doubt and assuming that perhaps I WAS being honest and forthright with my apology, you are doubling down on being right to condemn me for my words. And isn’t that doing exactly the same thing you are accusing me of? Because now YOU’re making a caricature of me by ignoring the fact that I DID apologize and provide clarification, but you present that caricature as the truth instead.

So would it be okay if I said something like “AI is behaving like someone who is extremely smart but because they are a woman they can’t hold real moral or emotional positions”? Do you think a simple apology that doesn’t show you have learned anything at all would be good enough? I was trying to explain why what you said is wrong and dangerous, and to be polite about it, but you doubled down anyway. Imagine if I tried to defend the above statement with “I apologize in advance but NOW you ARE acting like a woman”. Same concept with race, sexuality, and so on. You clearly have a prejudice about autistic people (and possibly disabled people in general) that you keep running into.

Like bro, actually think about what you are saying. The least you could have done is go back and edit your original comment and promise to do better, not make excuses for perpetuating harmful misinformation while leaving up your first comment to keep spreading it.

I didn’t say you were being malicious or ignoring your apology. You were being ignorant, though, and now stubborn to boot. When you perpetuate both prejudice and misinformation, you have to do more than give a quick apology and expect it to be over; you need to show your willingness to both listen and learn, and you have done the opposite. All people are the products of their environment, and ableism is one of the least recognized forms of discrimination. Even well-meaning people regularly run into it, and I am hoping you are one of these.

Noooooo Timmy the Pencil! I haven’t even seen this demonstration but I am deeply affected.

here, have a film that’ll make you creeped out by pencils from now on https://youtu.be/jo-hmifFevo

WTF? My boy Tim didn’t deserve to go out like that!

Look at the bright side: there are two Tiny Timmys now.

Tbf I’d gasp too, like wth

Humans are so good at imagining things alive that just reading a story about Timmy the pencil is eliciting feelings of sympathy and reactions.

We are not good judges of things in general. Maybe one day these AI tools will actually help us and give us better perception and wisdom for dealing with the universe, but that end-goal is a lot further away than the tech-bros want to admit. We have decades of absolute slop and likely a few disasters to wade through.

And there’s going to be a LOT of people falling in love with super-advanced chat bots that don’t experience the world in any way.

Maybe one day these AI tools will actually help us and give us better perception and wisdom for dealing with the universe

But where’s the money in that?

More likely we’ll be introduced to an anthropomorphic pencil, induced to fall in love with it, and then told by a machine that we need to pay $10/mo or the pencil gets it.

And there’s going to be a LOT of people falling in love with super-advanced chat bots that don’t experience the world in any way.

People fall in and out of love all the time. I think the real consequence of online digital romance - particularly with some shitty knock off AI - is that you’re going to have a wave of young people who see romance as entirely transactional. Not some deep bond shared between two living people, but an emotional feed bar you hit to feel something in exchange for a fee.

When they exit their bubbles and discover other people aren’t feed bars to slap for gratification, they’re going to get frustrated and confused from the years spent in their Skinner boxes. And that’s going to leave us with a very easily radicalized young male population.

Everyone interacts with the world sooner or later. The question is whether you developed the muscles to survive during childhood or you came out of your home as an emotional slab of veal, ripe for someone else to feast upon.

And that’s going to leave us with a very easily radicalized young male population.

I feel like something similar already happened

next you’re going to tell me the moon doesn’t have a face on it

It’s clearly a rabbit.

Were people maybe not shocked at the action or outburst of anger? Why are we assuming every reaction is because of the death of something “conscious”?

Right, it’s shocking that he snaps the pencil because the listeners were playing along, and then he suddenly went from pretending to have a friend to pretending to murder said friend. It’s the same reason you might gasp when a friendly NPC gets murdered in your D&D game: you didn’t think they were real, but you were willing to pretend they were.

The AI hype doesn’t come from people who are pretending. It’s a different thing.

For the keen observer there’s quite the difference between a make-believe gasp and a genuine reaction gasp, mostly in terms of timing, which is even more noticeable for unexpected events.

Make-believe requires thinking, so it happens more slowly than instinctive and emotional reactions. This is why modern acting is mainly about approaches like Method Acting, where the actor is supposed to be “Living truthfully under imaginary circumstances” (in other words, letting themselves believe “I am this person in this situation” and feeling what’s going on as if it were happening to them, thus genuinely living the moment and reacting to events): people in the audience who are good observers and/or have high empathy can tell faking from genuine feeling.

So in this case, even if the audience were playing along as you say, that doesn’t mean they were intellectually simulating their reactions, especially in a setting where those individuals are not the center of attention. In my experience, most people tend to just let themselves go along with it (i.e. let their instincts do their thing) unless they feel they’re being judged or, for some psychological or even physiological reason, have difficulty behaving naturally in the presence of other humans.

So it makes some sense that this situation showed people’s instinctive reactions.

And if you look, even here on Lemmy, at people doggedly making the case that AI actually thinks, and read not just their words but also the way they use them and which ones they choose, the methods they use for thinking (as reflected in how they choose arguments and put them together, most notably with the use of “arguments on vocabulary”, i.e. “proving” their point by interpreting the words that form definitions differently), and how strongly (i.e. emotionally) bound they are to their conclusion that AI thinks, it’s fair to say that those who rely most on their instincts rather than cold intellect when interacting with LLMs are the most convinced that the thing truly thinks.

i mean, i just read the post to my very sweet, empathetic teen. her immediate reaction was, “nooo, Tim! 😢”

edit - to clarify, i don’t think she was reacting to an outburst, i think she immediately demonstrated that some people anthropomorphize very easily.

humans are social creatures (even if some of us don’t tend to think of ourselves that way). it serves us, and the majority of us are very good at imagining what others might be thinking (even if our imaginings don’t reflect reality), or identifying faces where there are none (see - outlets, googly eyes).

Seriously, I get that AI is annoying in how it’s being used these days, but has the second guy seriously never heard of “anthropomorphizing”? Never seen Cast Away? Or played Portal?

Nobody actually thinks these things are conscious, and for AI I’ve never heard even the most diehard fans of the technology claim it’s “conscious.”

(edit): I guess, to be fair, he did say “imagining” not “believing”. But now I’m even less sure what his point was, tbh.

My interpretation was that they’re talking about exactly anthropomorphization; that’s what we’re good at. Put googly eyes on a random object and people will immediately ascribe human properties to it, even though it’s just three objects in a certain arrangement.

In the case of LLMs, the googly eyes are our language and the chat interface that it’s displayed in. The anthropomorphization isn’t inherently bad, but it does mean that people subconsciously ascribe human properties, like intelligence, to an object that’s stringing words together in a certain way.

Ah, yeah you’re right. I guess the part I actually disagree with is that it’s the source of the hype, but I misconstrued the point because of the sub this was posted in lol.

Personally, (before AI pervaded all the spaces it has no business being in) when I first saw things like LLMs and image generators I just thought it was cool that we could make a machine imitate things previously only humans could do. That, and LLMs are generally very impersonal, so I don’t think anthropomorphization is the real reason.

Most discussion I’ve seen about “ai” centers around what the programs are “trying” to do, or what they “know” or “hallucinate”. That’s a lot of agency being given to advanced word predictors.

That’s also anthropomorphizing.

Like, when describing the path of least resistance in electronics or with water, we’d say it “wants” to go towards the path of least resistance, but that doesn’t mean we think it has a mind or is conscious. It’s just a lot simpler than describing all the mechanisms behind how it behaves every single time.

Both my digital electronics teacher and my geography teacher said stuff like that when I was in high school, and I’m fairly certain neither of them believes water molecules or electrons have agency.

TIMMY NO!

“We are the only species on Earth that observe ‘Shark Week’. Sharks don’t even observe ‘Shark Week’, but we do. For the same reason I can pick up this pencil, tell you its name is Steve and go like this (breaks pencil) and part of you dies just a little bit on the inside, because people can connect with anything. We can sympathize with a pencil, we can forgive a shark, and we can give Ben Affleck an Academy Award for Screenwriting.”

~ Jeff Winger

I used to tell my kids “Just pretend to sleep, trick me into thinking you are sleeping, I don’t know the difference. Just pretend, lay there with your eyes closed.”

I could tell, of course, and they did end up asleep, but I think that is like the Turing test - if you are talking to someone and it’s not a person but you can’t tell, from your perspective it’s a person. Not necessarily from the perspective of the machine, we can only know our own experience so that is the measure.

Wait, wasn’t this directly from the very first episode of Community?

That professor’s name? Albert Einstein. And everyone clapped.

Yes it was - minus the googly eyes

Found it

https://youtu.be/z906aLyP5fg?si=YEpk6AQLqxn0UP6z

Good job OP. Took a scene from a show from 15 years ago and added some craft supplies from Kohl’s. Very creative.

Or the professor saw the scene, thought it was instructive, and incorporated it into his lectures lol

Only purely original jokes/rhetorical devices are allowed! /s

That professor’s name? Albert Einstein

Do we have a “NothingEverHappens” community somewhere on Lemmy, yet?

RIP Timmy

We barely knew ye

We met you only just at noon, A friend like Tim we barely knew. Taken from us far too soon, Yellow Standard #2.

torn by fingers malcontent, pink eraser left unspent

Here he lies, wasted, broken. No words or art, just a token. Could have helped to make one smarter, now is nothing but a martyr.

Alan Watts, talking on the subject of Buddhist vegetarianism, said that even if vegetables and animals both suffer when we eat them, vegetables don’t scream as loudly. It is not good for your own mental state to perceive something else suffering, whether or not that thing is actually suffering, because it puts you in an unhealthy position of ignoring your own inherent sense of compassion.

If you’ve ever had the pleasure of dealing with an abattoir worker, the emotional strain is telling. Spending day after miserable day slaughtering confused, scared, captive animals until you’re covered head to toe in their blood is… not good for your mental health.

The Texas Chainsaw Massacre often gets joked about because this “based on a true story” film wasn’t set in Texas, didn’t involve a chainsaw, and wasn’t a massacre. But what it did get right was how Ed Gein, the Plainfield Butcher, had his mind warped by decades of raising and killing farm animals for a living.

Anthropomorphism is one hell of a drug