Excerpt from the most interesting bit:

Architecturally this is interesting, because if we are going to have AIs living inside our apps in the future, apps will need to offer a realtime NPC API for AIs to join and collaborate – and that will look very unlike today’s app APIs. And how will we get the visual training data for AI models to connect what the user is seeing with the machine API? Questions for the future.

Anyway: I want to show you where I ended up.

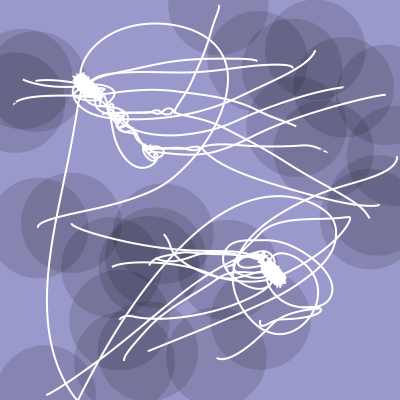

Here’s my dolphin NPC PartyKit sketchbook. I posted this just today.

You’ll see three GIFs:

You create a “pool” or a cursor park (“a space on a Google Docs page designated for placing your mouse cursor when you’re not actively editing the document”) or (as I call it) an embassy on the whiteboard. The NPCs need somewhere to hang out when they’re idle. Then you summon your NPCs from the comms walkie-talkie on the page.

NPCs can accept commands! From your walkie-talkie, you can tell the poet NPC to venture out of its embassy to write a poem. So it does that, as you can see, leaving a haiku on the whiteboard, then returns home.

NPCs can be proactive! The painter dolphin likes to colour in stars. When you draw a star, the painter cursor ventures out of the embassy and comes and hovers nearby… “oh I can help” it says. Unlike a notification, it’s ignorable: you can carry on as if it weren’t there, or you can accept its assistance. If you accept, it colours the star pink for you, then goes back to base till next time.
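
One way to picture those three behaviours is as a tiny state machine: idle in the embassy, out working after a command, or hovering with an offer you can take or leave. What follows is a minimal sketch under that assumption, not the code behind the sketchbook. Every name in it (`Npc`, `NpcEvent`, `step`, `position`) is made up for illustration, and none of it is a PartyKit API.

```typescript
// Hypothetical sketch: these types and functions are illustrative only.
type Point = { x: number; y: number };

type NpcState =
  | { kind: "idle" } // parked in the embassy
  | { kind: "working"; task: string; at: Point } // out on the whiteboard
  | { kind: "offering"; shapeId: string; at: Point }; // hovering, waiting for a yes

type NpcEvent =
  | { type: "command"; task: string; at: Point } // sent from the walkie-talkie
  | { type: "shapeDrawn"; shapeId: string; shape: string; at: Point }
  | { type: "accept" }
  | { type: "ignore" }
  | { type: "done" };

interface Npc {
  name: string;
  home: Point; // where the embassy is
  likes: string | null; // shape this NPC offers to help with, if any
  state: NpcState;
}

// Pure transition function: returns the next NPC, never mutates the input.
function step(npc: Npc, event: NpcEvent): Npc {
  const { state } = npc;
  if (state.kind === "idle" && event.type === "command") {
    return { ...npc, state: { kind: "working", task: event.task, at: event.at } };
  }
  if (state.kind === "idle" && event.type === "shapeDrawn" && event.shape === npc.likes) {
    return { ...npc, state: { kind: "offering", shapeId: event.shapeId, at: event.at } };
  }
  if (state.kind === "offering" && event.type === "accept") {
    return { ...npc, state: { kind: "working", task: `colour ${state.shapeId}`, at: state.at } };
  }
  if (state.kind === "offering" && event.type === "ignore") {
    return { ...npc, state: { kind: "idle" } };
  }
  if (state.kind === "working" && event.type === "done") {
    return { ...npc, state: { kind: "idle" } };
  }
  return npc; // anything else is a no-op
}

// Where the cursor should be drawn: at home when idle, otherwise at the target.
function position(npc: Npc): Point {
  const state = npc.state;
  return state.kind === "idle" ? npc.home : state.at;
}

// The painter notices a star, gets accepted, finishes, and goes home.
let painter: Npc = { name: "painter", home: { x: 0, y: 0 }, likes: "star", state: { kind: "idle" } };
painter = step(painter, { type: "shapeDrawn", shapeId: "s1", shape: "star", at: { x: 40, y: 25 } });
painter = step(painter, { type: "accept" });
painter = step(painter, { type: "done" });
```

The point of modelling it this way is that ignoring an offer is just another transition back to idle. Nothing blocks you while the NPC hovers, which is what separates it from a notification.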

Check out the movies on that page. It’s all working code! I can interact with these dolphin-cursor-NPCs. Let me tell you, it is uncanny to see a machine-driven cursor. It doesn’t move right.

Look, yes, it’s ridiculous, and these are woefully simple, toy interactions.

But, but, and, I learnt a ton.