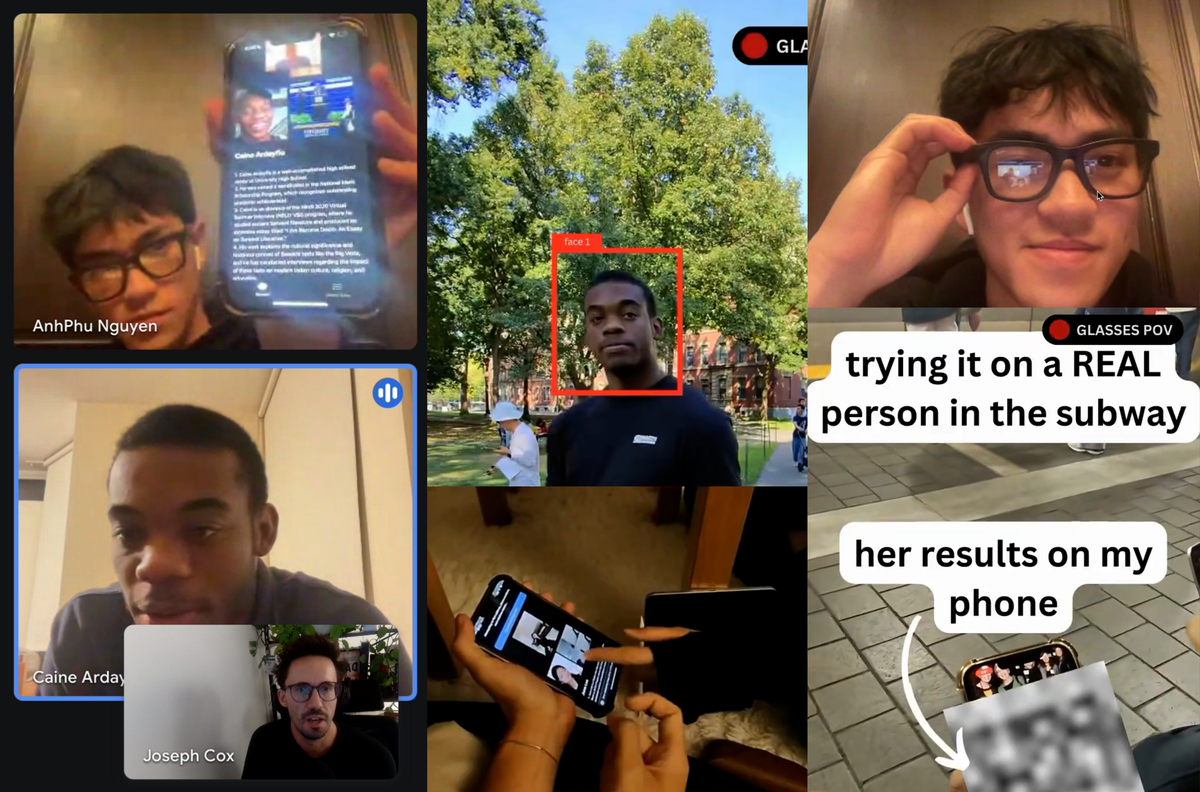

What’s actually making this possible is the PimEyes database. It’s insane that there is a facial recognition database that can be accessed like this. I doubt this is anywhere near legal in the EU.

The media keeps concentrating on Meta and the researchers but you can do the same with phone cameras, doorbell cameras, etc.

Yeah, PimEyes absolutely needs to be shut down, and laws need to be in place to protect private citizens from having their information shareable and searchable without their explicit consent. “Publicly available information” is always the line people use to defend these services. I’m arguing that our modern capabilities need to be accounted for. Things shouldn’t be so publicly accessible in the first place, and personal data aggregation should be a much more vetted and potentially licensed business. Can we talk about what purpose these facial recognition databases serve other than to stalk, expose, or extort people? If they required proof of identity and only allowed searches of your own face, then I could understand the value.

I listened to the 404 Media podcast about this yesterday and the author argues that the subject of the article’s ire is intended to be the researchers themselves. Specifically, the bad ethics of testing this integration on non-consenting individuals (even though it was seemingly done with good intent).

Luckily the researchers realized what the fuck they had just made and pivoted the project to being about how to break the integration (i.e., opt out of facial recognition systems and freeze your credit).

Isn’t that why Google Glass was cancelled?

As much as I hate Meta and the rest, this is really a personal failure. Everyone knew that this would happen and everyone kept uploading all their unsecured biometric info to the public internet. This would be feasible no matter how cool and open social media platforms are.

It’s not solvable by any other means than not publishing the data in the first place. Getting existing biometric scramblers for image and audio data into the hands of the public is the big first step that would be necessary to solve this.

No, you can address this through laws and legislation. You literally just ban people from amassing personal information on other people like Europe is doing.

Banning things doesn’t stop people from doing those things. You don’t stop locking your bike/car just because theft is illegal. Other countries’ governments could still use it, criminals could use it, and your own country’s agencies could use it because they might be exempt from certain laws.

Yes, it should be outlawed, but that’s only half the solution.

True, but corporations are the most clear and immediate threat and making it sufficiently (!) expensive for them does discourage bad behaviour.

it does not discourage anything. illegally designed cookie notices? the dozen tracking providers on all the websites? digital public passport passes that track your habits, but never told you about it, not even at purchase?

the law won’t save you. laws will prevent no one from doing this, just like outlawing encryption couldn’t prevent decentralized encrypted messengers from being used.

as a European, I don’t think EU laws have helped anything in this. if anything they have only helped to make websites a little more honest in what they do. but even their cookie notices and tracking agreement questions are most often illegal, filled with dark patterns prohibited by GDPR. and who the fuck cares?

> the law won’t save you. laws will prevent no one from doing this, just like outlawing encryption couldn’t prevent decentralized encrypted messengers from being used.

An illegal actor could still comb the internet and create a private face recognition db, but they would be taking on risk, paying substantial infrastructure costs, would not be able to make it widely available for fear of being caught, and would have limited options for actually making any real money from it.

It would completely prevent, say, your average stalker, or jilted ex, or non-techy weirdo from being able to access it, and it would prevent corporations from spending all their time building business around privacy invasion.

> An illegal actor could still

and that’s all legality on the internet can achieve: calling these people illegal actors. just like I would be called an illegal actor if I kept using Matrix and Signal after (and if) chat control passes.

> but they would be taking on risk,

have you seen this article by Proton, showing how much big tech pays in penalties for their illegal acts?

it does not matter.

> paying substantial infrastructure costs,

piece of cake for those who already have it. I’m not only talking about traditional big tech, but also other large companies like Clearview AI.

> would not be able to make it widely available for fear of being caught, and would have limited options for actually making any real money from it.

Except if they are

- in a non-EU country, because the EU has no power outside its borders

- doing business with police forces and such, because then it can easily get an exception, or get hidden or handwaved away

> It would completely prevent, say, your average stalker, or jilted ex, or non-techy weirdo from being able to access it, and it would prevent corporations from spending all their time building business around privacy invasion.

only if it gets found out, and if the person doing it does nothing to hide themselves. I don’t think this would be effective.

what I do think, though, is that this could be used as another reason to support chat control, and automated surveillance with it, maybe not even at the chat system level but at the camera software or operating system level.

Only to an extent. Facial recognition photo scrubbing across the internet is a little tough to defend against, even for those who are privacy and security minded. Good software will find you in the background of photos. It’ll have your location and the time taken if the photos are geotagged too.

We also don’t have control over automatic number plate recognition, surveillance cameras, etc.

I, for one, have consistently avoided publishing photos of myself on the Internet my entire life (and I’ve been online since the '90s, so I was really ahead of the curve on that), and even shy away from being in other people’s photos as much as possible (sometimes you can’t avoid it without consequences, such as if it’s a driver’s license photo, or imposed by your employer, or the news covering an event you’re participating in, or that sort of thing). Even then, I still have very little confidence that I’ve managed to stay out of these sorts of facial recognition databases.

Exactly. I’m in the same boat as you. The bulk of my exposure was in bands on MySpace. I was practically anonymous by the time Facebook became popular.

I’m still certain I’m in hundreds of other people’s pictures.

I’m talking about apps (optimally your main camera app too) needing to have built-in biometric fuzzing. Phones (by default) just shouldn’t be capable of creating pictures that can be used for biometrics. Camera apps for this already exist, but nobody uses them.

Of course the existing pictures are already on the internet, but that’s not a reason to not change course. The sooner we stop supplying them data, the worse their detection systems will be.

Simply not uploading pictures of yourself at all is best, but maybe that’s too hard for some people.

Sure, but that still doesn’t change that you don’t have control over other people’s pictures.